My notes on speed

I really like really fast websites. In my quest to make my own website faster, I’ve gone on a deep dive into performance hacking. I’ll start at the tip of the iceberg, but quickly move farther down. What I’ve found is that performance on the web mostly amounts to delivering critical resources as fast as possible.

Basics

More stuff inherently means less performance. For this reason, the easiest wins come from reducing the amount of stuff you ship to the end user.

When designing websites, consider the “Rule of Least Power” from the W3C:

When designing computer systems, one is often faced with a choice between using a more or less powerful language for publishing information, for expressing constraints, or for solving some problem. This finding explores tradeoffs relating the choice of language to reusability of information. The “Rule of Least Power” suggests choosing the least powerful language suitable for a given purpose.

Don’t use crap

Libraries like jQuery and Axios are detrimental to performance. They contain lots of code your website will never use, plus the tech debt of years of development. It’s always worth looking into lightweight alternatives, or even native browser APIs. Frameworks like React are guilty of similar crimes, and may be overkill for some websites.
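As one example of a native alternative, the most common Axios pattern ("GET some JSON and throw on HTTP errors") fits in a few lines on top of the built-in `fetch`. This is a minimal sketch: `getJson` is a hypothetical helper, and the `fetchImpl` parameter exists only so it can be tested without a network.

```typescript
// Hypothetical helper: GET a URL and parse the body as JSON using only the built-in fetch.
// Unlike Axios, fetch does not reject on 4xx/5xx responses, so we check res.ok ourselves.
async function getJson<T>(url: string, fetchImpl: typeof fetch = fetch): Promise<T> {
  const res = await fetchImpl(url, { headers: { Accept: "application/json" } });
  if (!res.ok) {
    throw new Error(`GET ${url} failed with HTTP ${res.status}`);
  }
  return (await res.json()) as T;
}
```

Typical use would be something like `const posts = await getJson<{ title: string }[]>("/api/posts");`, with no extra bytes shipped to the browser.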

Looking at you, Google Analytics

Google Analytics scripts are very heavy, and are often blocked by browsers. I’ve written a terrible post on your options for self-hosted analytics that should hopefully alleviate both problems while being more privacy-conscious.

Server Side Rendering (SSR)

With a conventional client-side framework, the browser must first download, parse, and execute all of the JavaScript before any of the page is visible. SSR alleviates this problem by rendering the initial HTML on the server, so users receive a page with content even without JavaScript.

PenPen's Note: There is no guarantee the user will ever get JavaScript. All sorts of things can go wrong, including outdated browsers, errors, network loss, and even script blockers. In this way, server-side rendering also increases resiliency. See “Everyone has JavaScript, right?” and the relevant discussion.

SSR is provided through metaframeworks like Next.js, Remix, Nuxt, SvelteKit, and SolidStart.
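Stripped of any framework, the core idea of SSR is simply building the HTML string on the server. The sketch below is a hypothetical `renderPage` function, not how any of those metaframeworks work internally. In a real server you would send its return value as the response body with a `text/html` content type. The script path `/app.js` is a placeholder.

```typescript
interface Post {
  title: string;
  url: string;
}

// Escape the characters that are significant in HTML text and attribute values
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Build the complete page on the server, so content is visible before (or without) any JavaScript
function renderPage(heading: string, posts: Post[]): string {
  const items = posts
    .map((p) => `<li><a href="${escapeHtml(p.url)}">${escapeHtml(p.title)}</a></li>`)
    .join("");
  return (
    `<!doctype html><html><head><title>${escapeHtml(heading)}</title></head>` +
    `<body><h1>${escapeHtml(heading)}</h1><ul>${items}</ul>` +
    // Placeholder client bundle, deferred so it never blocks the already-rendered content
    `<script src="/app.js" defer></script></body></html>`
  );
}
```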

Optimize Images

Images are very often the largest files on a website. There are a couple of build steps you can take to shrink them:

- Minify images

- Convert images to WebP

Additionally, you should consider lazy-loading images.
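Put together, the output of those build steps can be served with a `<picture>` element: browsers that support WebP pick the `<source>`, and everything else falls back to the `<img>`. The sketch below is a hypothetical string-building helper, and it assumes your build already produced both files and knows their dimensions. Giving the `<img>` explicit `width` and `height` also lets the browser reserve space before the image arrives. Images visible on first load are left eager, since lazy-loading them would only delay them.

```typescript
// Hypothetical description of one image after the build step has produced a WebP copy
interface BuiltImage {
  src: string;    // original (minified PNG/JPEG) used as the fallback
  webp: string;   // WebP version for browsers that support it
  width: number;  // intrinsic size, so the browser can reserve space early
  height: number;
  alt: string;
}

// Escape characters that would break out of a double-quoted HTML attribute
const attr = (s: string) => s.replace(/&/g, "&amp;").replace(/"/g, "&quot;");

function pictureTag(img: BuiltImage, aboveTheFold = false): string {
  // Lazy-load everything except images the user sees immediately
  const loading = aboveTheFold ? "eager" : "lazy";
  return (
    `<picture>` +
    `<source srcset="${attr(img.webp)}" type="image/webp">` +
    `<img src="${attr(img.src)}" alt="${attr(img.alt)}" width="${img.width}" height="${img.height}" loading="${loading}" decoding="async">` +
    `</picture>`
  );
}
```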

Minification

Minifying JavaScript and CSS is one of the most common ways to get really great performance wins. I personally use CSSO for CSS; I consulted GoalSmashers’ CSS minification benchmark to decide which minifier to use.

For JavaScript, I use Terser, though UglifyJS is another good option. Closure Compiler is unmaintained. Note that if you use a framework or build tool like webpack, swc, or esbuild, minification is mostly handled for you already. A good benchmark is here.
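To get a feel for what a minifier actually removes, here is a deliberately naive CSS minifier. It is a toy for illustration, not a substitute for CSSO or Terser: it ignores strings, `url(...)` values, and plenty of other edge cases. `minifyCss` is a hypothetical name.

```typescript
// Toy CSS minifier: shows the kind of bytes a real minifier strips.
// It does not understand strings or url(...) contents, so do not ship it.
function minifyCss(css: string): string {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, "") // drop comments
    .replace(/\s+/g, " ")             // collapse runs of whitespace
    .replace(/\s*([{};,])\s*/g, "$1") // no spaces needed around these
    .replace(/:\s+/g, ":")            // "color: red" -> "color:red"
    .replace(/;}/g, "}")              // the last semicolon in a block is optional
    .trim();
}
```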

Use a CDN

Latency is a huge problem for website speed. The farther your user is from your server, the longer each round trip takes. A CDN is a network of servers strategically located around the world; it moves your content much closer to your users, reducing load time. Serverless and static sites are really popular right now because they take advantage of CDNs and scale really well.
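To see why distance matters, here is a back-of-the-envelope sketch. It assumes light in optical fiber covers about 200 km per millisecond (roughly two thirds of its speed in a vacuum). Real routes are longer than straight lines and add processing delays, so treat the result as a floor. `minRoundTripMs` is a hypothetical name.

```typescript
// Assumption: light in optical fiber travels roughly 200 km per millisecond
const FIBER_KM_PER_MS = 200;

// Physical lower bound on one round trip (there and back) to a server distanceKm away
function minRoundTripMs(distanceKm: number): number {
  return (2 * distanceKm) / FIBER_KM_PER_MS;
}
```

For a user 10,000 km away, that floor is 100 ms per round trip, versus about 1 ms for a CDN edge 100 km away, and a page load involves many round trips.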

If you do have a static site, deploying it to services like Cloudflare Pages, Netlify, Fly.io, and Vercel can give you really easy wins.

Cumulative Layout Sh*t

Cumulative layout shift (CLS) is the jank that happens while a page loads. It’s really, really terrible, and it hurts the perceived speed of a page. Things can continue to load in the background, but content above the fold should be settled by the time the page loads. Examples of layout shift include the body text shifting once a font loads, an image pushing content out of the way, or JavaScript modifying content.

The JavaScript problem is particularly potent. If you can predict, with any degree of accuracy, the general size or styles of an element before it is hydrated, reserve that space with CSS.
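To put a number on it, the Layout Instability API scores each individual shift as impact fraction × distance fraction. The impact fraction is the share of the viewport the moving element covers in either frame, and the distance fraction is how far it moved relative to the viewport’s larger dimension. CLS is then built from these per-shift scores. The sketch below handles a single element and ignores clipping to the viewport, and its names are hypothetical.

```typescript
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

const area = (r: Rect) => r.width * r.height;

// Area covered by either rectangle (inclusion-exclusion on their overlap)
function unionArea(a: Rect, b: Rect): number {
  const overlapW = Math.max(0, Math.min(a.x + a.width, b.x + b.width) - Math.max(a.x, b.x));
  const overlapH = Math.max(0, Math.min(a.y + a.height, b.y + b.height) - Math.max(a.y, b.y));
  return area(a) + area(b) - overlapW * overlapH;
}

// Score for ONE element moving from `before` to `after` in a viewport of the given size.
// Simplified: no clipping to the viewport, no multiple shifting elements.
function layoutShiftScore(before: Rect, after: Rect, viewportW: number, viewportH: number): number {
  // Impact fraction: share of the viewport touched by the element in either frame
  const impactFraction = unionArea(before, after) / (viewportW * viewportH);
  // Distance fraction: largest move, relative to the viewport's larger dimension
  const moved = Math.max(Math.abs(after.x - before.x), Math.abs(after.y - before.y));
  const distanceFraction = moved / Math.max(viewportW, viewportH);
  return impactFraction * distanceFraction;
}
```

For example, a block filling the top half of a 1000 × 1000 viewport that a late-loading banner pushes down 250 px touches 75% of the viewport and moves 25% of it, scoring 0.75 × 0.25 = 0.1875. Google’s Core Web Vitals guidance treats a CLS of 0.1 or less as good.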

The font problem requires more nuance.

Focusing on fonts

- https://sia.codes/posts/making-google-fonts-faster/

- https://medium.com/reloading/preload-prefetch-and-priorities-in-chrome-776165961bbf

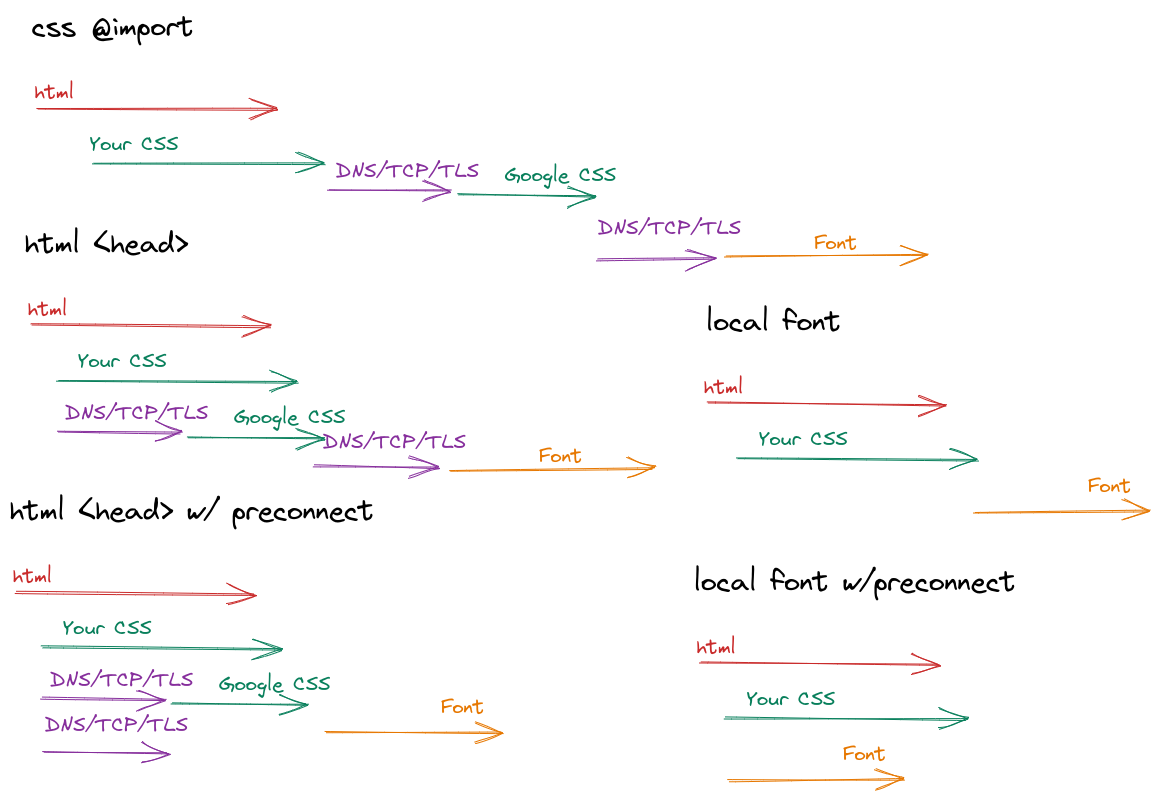

Don’t use @import

When you import Google Fonts through CSS, you increase the critical request depth: the browser must first download and parse your CSS file before it even discovers Google’s font CSS. By moving your font imports into your HTML, you let the browser download both CSS files in parallel.
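If you control the build, that move can even be automated. The sketch below is a hypothetical `hoistImports` step that strips simple `@import` rules out of a stylesheet and returns equivalent `<link>` tags for the `<head>`. It assumes plain `@import url(...)` or `@import "..."` rules; media queries and `layer()` conditions are dropped rather than preserved.

```typescript
// Matches `@import url("x");`, `@import 'x';` and similar. Anything after the URL
// (media queries, layer(...)) is swallowed and dropped by this sketch.
const IMPORT_RE = /@import\s+(?:url\(\s*)?["']?([^"')\s]+)["']?\s*\)?[^;]*;/g;

// Hypothetical build step: pull @import rules out of a stylesheet and return <link> tags
// to put in the HTML <head>, so the browser can fetch them in parallel with your CSS.
function hoistImports(css: string): { links: string[]; css: string } {
  const links: string[] = [];
  const rest = css.replace(IMPORT_RE, (_match: string, href: string) => {
    // "&" must be escaped inside an HTML attribute value
    links.push(`<link rel="stylesheet" href="${href.replace(/&/g, "&amp;")}">`);
    return "";
  });
  return { links, css: rest.trim() };
}
```

Place the generated links before your own stylesheet’s `<link>` so the imported rules still come first in the cascade.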

While you’re at it, don’t use Google Fonts

Ideally, all your content should be delivered from the same server. Whenever the browser connects to a different domain (for Google Fonts, fonts.googleapis.com and fonts.gstatic.com), it must perform a DNS lookup, a TCP handshake, and a TLS handshake before it can request anything, which can add significantly to load time.

Google Webfonts Helper can help you self-host your fonts instead.
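To put rough numbers on that extra connection, the sketch below adds up the setup round trips for a first request to a new origin. It assumes HTTP/1.1 or HTTP/2 over TCP with no connection reuse (HTTP/3 over QUIC merges the transport and TLS handshakes). The function names and inputs are hypothetical.

```typescript
// Rough time to first byte from a brand-new origin, assuming TCP + TLS (no HTTP/3).
// dnsMs: DNS lookup time (depends on your resolver, not on the server's distance)
// rttMs: round-trip time to the server
function newOriginCostMs(dnsMs: number, rttMs: number, tls13 = true): number {
  const tcpHandshake = rttMs;                   // SYN / SYN-ACK
  const tlsHandshake = (tls13 ? 1 : 2) * rttMs; // TLS 1.3: 1 round trip, TLS 1.2: 2
  const request = rttMs;                        // the actual request and response
  return dnsMs + tcpHandshake + tlsHandshake + request;
}

// On a connection to your own server that is already open, only the request is paid for
function reusedConnectionCostMs(rttMs: number): number {
  return rttMs;
}
```

With a 20 ms DNS lookup and a 50 ms round trip, a fresh TLS 1.3 origin costs about 170 ms before the first byte, compared with 50 ms over an already-open connection.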

Preload fonts

If you recall, referencing files from CSS causes delay, because the CSS must be downloaded in its entirety before the browser even learns about the files it references. If I’m sure a font is important, I add `<link rel="preload" as="font" type="font/woff2" href="fontname.woff2" crossorigin/>` to the head. This instructs the browser to start downloading the font in parallel with the CSS, so by the time the font is needed it is hopefully already available.
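If you preload more than one font, a small helper can generate the tags. This is a hypothetical sketch that infers the `type` from the file extension using the registered `font/*` MIME types. Note that `crossorigin` is needed even for fonts on your own domain: fonts are always fetched in CORS mode, and a preload without it does not match, so the font ends up downloaded twice.

```typescript
// Registered font MIME types (RFC 8081), keyed by file extension
const FONT_TYPES: Record<string, string> = {
  woff2: "font/woff2",
  woff: "font/woff",
  ttf: "font/ttf",
  otf: "font/otf",
};

// Hypothetical helper: preload hints for fonts you know the first screen needs
function preloadFontTags(hrefs: string[]): string[] {
  return hrefs.map((href) => {
    // Extension of the path, ignoring any ?query string
    const ext = href.split("?")[0].split(".").pop()?.toLowerCase() ?? "";
    const type = FONT_TYPES[ext];
    if (!type) throw new Error(`unknown font type for ${href}`);
    // crossorigin makes the preload match the CORS-mode font request
    return `<link rel="preload" as="font" type="${type}" href="${href}" crossorigin>`;
  });
}
```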

Eliminate Third Party (scripts|styles|fonts|*)

The DNS/TCP/TLS issue extends beyond fonts. Instead of loading scripts and styles from external CDNs, host them yourself to avoid those extra connections.
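A quick way to find these is to list the third-party origins your HTML loads subresources from. The sketch below is a regex-based approximation (an HTML parser would be more robust), and `thirdPartyOrigins` is a hypothetical helper. It only sees tags in the HTML itself, not resources requested later by scripts or CSS.

```typescript
// Hypothetical audit helper: which other origins does this HTML load subresources from?
// Only tags that fetch resources are considered, so ordinary <a href> links are ignored.
function thirdPartyOrigins(html: string, siteUrl: string): string[] {
  const resourceRe = /<(?:script|link|img|iframe|source)\b[^>]*?\s(?:src|href)\s*=\s*["']([^"']+)["']/gi;
  const site = new URL(siteUrl).origin;
  const origins = new Set<string>();
  let match: RegExpExecArray | null;
  while ((match = resourceRe.exec(html)) !== null) {
    let url: URL;
    try {
      url = new URL(match[1], siteUrl); // relative paths resolve against your own site
    } catch {
      continue; // not a valid URL
    }
    const isHttp = url.protocol === "https:" || url.protocol === "http:";
    if (isHttp && url.origin !== site) origins.add(url.origin);
  }
  return Array.from(origins).sort();
}
```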

Defer/Async JavaScript

- https://javascript.info/script-async-defer

- https://medium.com/reloading/preload-prefetch-and-priorities-in-chrome-776165961bbf

When the browser encounters a `<script>` element, it pauses parsing the HTML to download and execute the JavaScript. This is detrimental to performance. Historically, developers worked around this by placing their script tags at the bottom of the HTML. Unfortunately, this means the JavaScript doesn’t start downloading as early as possible. Today we have two better tools at our disposal. The defer attribute tells the browser to download the script in the background, but run it once the HTML has finished parsing. The async attribute also tells the browser to download the script in the background, but run it as soon as it’s ready. The right attribute depends on the context, but both improve performance.

| | Defer | Async | Script on top | Script on bottom |
|---|---|---|---|---|
| Begins download instantly | ✅ | ✅ | ✅ | ❌ |
| Runs | At DOM ready | As soon as downloaded | As soon as downloaded | At DOM ready |
| Doesn’t block rendering | ✅ | ✅ | ❌ | ✅ |
| Good for | Scripts depending on the DOM | Background scripts (analytics, etc.) | (Formerly) background scripts, libraries | (Formerly) scripts depending on the DOM |
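The "Good for" row boils down to a simple rule, sketched below as a hypothetical `scriptTag` helper. It assumes you know, per script, whether it touches the DOM and whether it has to run after another script: `defer` keeps scripts in document order, while `async` scripts run in whatever order they finish downloading.

```typescript
// Hypothetical description of one script
interface ScriptSpec {
  src: string;
  needsDom: boolean; // reads or modifies page elements, so must wait for parsing
  ordered?: boolean; // must run after another script (defer keeps order; async does not)
}

function scriptTag({ src, needsDom, ordered = false }: ScriptSpec): string {
  // Either attribute lets the tag live in <head> and start downloading immediately
  // without blocking the parser; they differ only in when the script runs.
  const attribute = needsDom || ordered ? "defer" : "async";
  return `<script src="${src}" ${attribute}></script>`;
}
```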

Nitpicks

Minify HTML

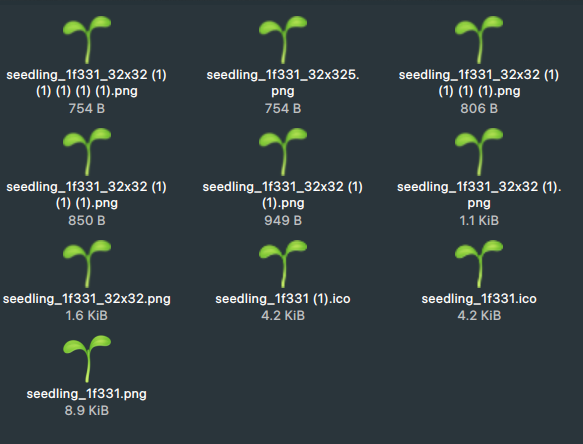

Favicons

So this is where the “at all costs” comes in. I’m really, really embarrassed and regretful about this one. My favicon was the second-biggest file on my website, which felt really wrong to me. I began by minifying the PNG about 10 times before converting it to an ICO, which unfortunately increased the file size. A couple of things:

- Browsers, much like computers, don’t seem to care about a file’s actual extension

- Browsers check the `/favicon.ico` path for an icon if there is no `<link>` to one.

Naturally, so as not to compromise on icon size (PNG vs ICO) or HTML size (eww, an extra link tag!), I concluded the best route forward was to give my PNG file the wrong extension. I have a PNG file named favicon.ico.
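Browsers can get away with this because they identify image formats by their leading bytes (their "magic number") rather than by the file name. The sketch below is a simplified version of that check; the full rules live in the WHATWG MIME Sniffing standard, and `sniffImageType` is a hypothetical name.

```typescript
// Leading bytes that identify each format
const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];
const ICO_SIGNATURE = [0x00, 0x00, 0x01, 0x00];

function hasPrefix(bytes: Uint8Array, signature: number[]): boolean {
  // Out-of-range indexes read as undefined, so short files simply fail the check
  return signature.every((b, i) => bytes[i] === b);
}

// The file name is never consulted: only the first bytes decide.
function sniffImageType(bytes: Uint8Array): "image/png" | "image/x-icon" | "unknown" {
  if (hasPrefix(bytes, PNG_SIGNATURE)) return "image/png";
  if (hasPrefix(bytes, ICO_SIGNATURE)) return "image/x-icon";
  return "unknown";
}
```

In Node, the result of `readFileSync("favicon.ico")` can be passed directly, since a `Buffer` is a `Uint8Array`. For my favicon.ico, it would report a PNG.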

email-decode.min.js (truly the root of all evils)

If you have an email address anywhere on your site and also happen to use Cloudflare, it might helpfully start obfuscating the address and add an extra 1.1 kB script named email-decode.min.js. It’s not particularly advanced. To prove my point, here is a re-implementation of the decode function:

```typescript
// Cloudflare-style email obfuscation: the first two hex digits are an XOR key,
// and each following pair of hex digits is one character XORed with that key.
function decode(token: string): string {
  const hexByte = (s: string, i: number) => parseInt(s.slice(i, i + 2), 16);
  const key = hexByte(token, 0);
  let result = "";
  for (let i = 2; i < token.length; i += 2) {
    result += String.fromCharCode(hexByte(token, i) ^ key);
  }
  return result;
}
```

And here it is minified:

```javascript
function d(t){const e=(t,e)=>parseInt(t.slice(e,e+2),16),n=e(t,0);let r="";for(let o=2;o<t.length;o+=2)r+=String.fromCharCode(e(t,o)^n);return r}
```

To save a little bandwidth, you could disable the feature in Cloudflare and inline the decoder into your main script. You would also need an encoder for the decoder to be of any use, and I have not bothered to write one: I suspect scrapers are smart enough to decode Cloudflare’s emails themselves, so you are much better off writing your own scheme.
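If you do go down this road, the missing piece is an encoder. Below is a minimal sketch of one, assuming the same format the decode function reads: two hex digits holding a one-byte XOR key, then two hex digits per character. `encode` is a hypothetical name, and this only obfuscates; a scraper that knows the format can reverse it.

```typescript
// Hypothetical encoder for the key-prefixed XOR/hex format that decode reads
function encode(email: string, key: number = Math.floor(Math.random() * 256)): string {
  if (!Number.isInteger(key) || key < 0 || key > 0xff) {
    throw new RangeError("key must be a single byte (0-255)");
  }
  const hexByte = (n: number) => n.toString(16).padStart(2, "0");
  let token = hexByte(key);
  for (let i = 0; i < email.length; i++) {
    const code = email.charCodeAt(i);
    // Two hex digits hold one byte, so only single-byte characters are supported
    if (code > 0xff) throw new RangeError(`cannot encode ${JSON.stringify(email[i])}`);
    token += hexByte(code ^ key);
  }
  return token;
}
```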

Prefetching Links, SPAs, Oh My!

I have seen this technique under a large variety of umbrellas, including pjax, client-side routing, single-page applications, asynchronous navigation, and link prefetching. Really, they all work toward the same goal: making client-side navigation as fast as possible.

Prefetch

Some scripts fetch pages ahead of time based on predictions about user input. Ideally, by the time the user releases their mouse button, the next page is already downloaded, or close to it.

| | instant.page | InstantClick | Quicklink |
|---|---|---|---|
| Last commit | Recent | 2014 | Recent |
| Method | Hover, mousedown, viewport | Hover | Viewport |
| Client-side routing | ❌ (full reload) | Hot-swaps body + title | ❌ (full reload) |
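The core of hover-based prefetching is small. Below is a hypothetical sketch in the spirit of these libraries, not the source of any of them: when the pointer enters a same-origin link, it adds a `<link rel="prefetch">` hint for that page. Whether the browser can reuse the prefetched response on click depends on your caching headers, and the `seen` set keeps each page from being requested twice.

```typescript
// Key a URL without its #fragment, so "/post" and "/post#intro" count as one page
function pageKey(href: string, base: string): string {
  const url = new URL(href, base);
  return url.origin + url.pathname + url.search;
}

// Prefetch only same-origin pages we have not already requested, and never the current page
function shouldPrefetch(href: string, current: string, seen: Set<string>): boolean {
  if (new URL(href, current).origin !== new URL(current).origin) return false;
  const key = pageKey(href, current);
  return key !== pageKey(current, current) && !seen.has(key);
}

// Browser-only wiring: call once after the page loads
function installHoverPrefetch(): void {
  const seen = new Set<string>();
  document.addEventListener("mouseover", (event) => {
    const el = event.target;
    if (!(el instanceof Element)) return;
    const link = el.closest("a[href]");
    if (!(link instanceof HTMLAnchorElement) || !shouldPrefetch(link.href, location.href, seen)) return;
    const key = pageKey(link.href, location.href);
    seen.add(key);
    // Ask the browser to fetch the page at low priority into its cache
    const hint = document.createElement("link");
    hint.rel = "prefetch";
    hint.href = key;
    document.head.appendChild(hint);
  });
}
```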

Routers

The job of a client-side router is to turn MPAs (multi-page applications) into SPAs (single-page applications). If this sounds like gibberish to you, here’s the rundown. Whenever you click a link on a normal website, the browser fully reloads the page. This can mean rerunning all your scripts, reparsing all your CSS, and generally doing a ton of wasteful work. Client-side routers instead hijack click events, fetch the next page in the background, and update only the parts of the page that changed. The result is really snappy navigation (Quartz also prefetches on hover).
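Here is a minimal sketch of that idea, assuming a site whose pages share the same `<head>`. It is hypothetical and far less complete than Turbo or Million router: scripts inside the new `<body>` are not executed, and focus and scroll restoration are mostly ignored. `shouldHijack` holds the rules for which clicks to leave to the browser (modifier keys for new tabs, other origins, downloads, in-page anchors).

```typescript
// The subset of a click event the routing decision needs
interface ClickLike {
  button: number;
  metaKey: boolean;
  ctrlKey: boolean;
  shiftKey: boolean;
  altKey: boolean;
  defaultPrevented: boolean;
}

// Only take over plain left clicks on same-origin links that would open in this tab
function shouldHijack(click: ClickLike, href: string, target: string, isDownload: boolean, current: string): boolean {
  if (click.defaultPrevented || click.button !== 0) return false;
  if (click.metaKey || click.ctrlKey || click.shiftKey || click.altKey) return false; // new tab/window
  if (isDownload || (target !== "" && target !== "_self")) return false;
  const to = new URL(href, current);
  const from = new URL(current);
  if (to.origin !== from.origin) return false;
  // Leave in-page #fragment jumps to the browser
  return !(to.pathname === from.pathname && to.search === from.search && to.hash !== "");
}

// Fetch the next page and swap in its <body> and <title>; <head> (and its CSS) stays put
async function navigate(url: string, push: boolean): Promise<void> {
  const res = await fetch(url);
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const next = new DOMParser().parseFromString(await res.text(), "text/html");
  document.title = next.title;
  document.body.replaceWith(next.body);
  if (push) {
    history.pushState(null, "", url);
    window.scrollTo(0, 0);
  }
}

// Browser-only wiring: call once after the first page load
function installRouter(): void {
  document.addEventListener("click", (event) => {
    const el = event.target;
    if (!(el instanceof Element)) return;
    const link = el.closest("a[href]");
    if (!(link instanceof HTMLAnchorElement)) return;
    const href = link.href;
    if (!shouldHijack(event, href, link.target, link.hasAttribute("download"), location.href)) return;
    event.preventDefault();
    // If anything goes wrong, fall back to a normal full page load
    navigate(href, true).catch(() => location.assign(href));
  });
  // Back/forward buttons: re-render whatever URL the browser moved to
  window.addEventListener("popstate", () => {
    navigate(location.href, false).catch(() => location.reload());
  });
}
```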

For client-side routers, you are looking for standalone options. http://microjs.com/#Router could be a good starting place. I’ve seen Turbo and Million Router in the wild, Fireship has published Flamethrower, and Navigo looks decent.

Other terms, libraries, inspiration, etc

Ben Holmes has his own implementation, and his site has lots of good performance tips in general.

Function Dynamic has the fastest website I’ve seen. They describe their approach as “Asynchronous Navigation”.

https://quartz.jzhao.xyz is also quite fast, and open source! It uses the Million router.

Epilogue

Researching Speed

- https://calendar.perfplanet.com/2022/the-web-performance-engineers-swiss-army-knife/

- https://calendar.perfplanet.com/2022/countdown-top-5-favorite-web-performance-tools/

- Lighthouse

Other speed related posts

- https://calendar.perfplanet.com/2022/fast-is-good-instant-is-better/

- https://calendar.perfplanet.com/2022/faster-data-visualizations/

- Consider statically rendering visualizations as well

- https://calendar.perfplanet.com/2021/faster-websites-by-using-less-html/

- https://calendar.perfplanet.com/2021/eli5-web-performance-optimization/

- https://calendar.perfplanet.com/2020/1001-questions-web-performance/

To-Do

- CLS

- Key terms (Lighthouse)

- Why does perf matter?